Featured as part of the Building Digital Twins for Physical AI With NVIDIA Omniverse course at NVIDIA GTC in Washington, D.C., on October 27, 2025.

Real-time, high-fidelity 3D visualization is no longer just for high-end hardware and render farms. Robotics engineers, digital twin developers, and architectural designers all need to visualize and simulate large-scale, photorealistic environments. At Miris, we’re enabling this next generation of 3D experiences through our adaptive spatial streaming technology. By connecting with engines, applications, and frameworks such as OpenUSD, Omniverse, and IsaacSim, we’ve made it possible to stream large-scale, high-fidelity environments to consumer-grade devices without compromising the end-user experience.

Even as 3D asset pipelines have evolved over the decades, powerful local hardware remains a costly requirement. Large-scale OpenUSD scenes can reach hundreds of gigabytes and demand rendering resources that many researchers, developers, and end users simply don’t have. This limits who can access and interact with high-quality simulations. For robotics, architecture, and industrial digital twins, that barrier means slower iterations, delayed insights, and siloed teams.

To show that Miris’ AI-driven 3D streaming technology can significantly reduce these hardware and cost barriers, we’ll walk through how we integrate our service with Omniverse and IsaacSim, and demonstrate that you can interact with massive, photorealistic 3D environments in real time, even on modest hardware.

We designed the Miris SDK for integration into a wide variety of 3D platforms, from game engines and web browsers to real-time simulation frameworks. OpenUSD’s ecosystem and its diverse range of integrations across digital asset creation tools (e.g., Autodesk Maya, SideFX Houdini, Blender) and engines and simulation platforms (e.g., NVIDIA Omniverse, IsaacSim, Unity) make it a perfect integration point for Miris. That said, OpenUSD’s DNA as a scene description and local rendering host raises some questions about how it might be used as a client for streamed spatial data, especially on client devices with modest computational resources.

Miris’ adaptive streaming intelligently balances network bandwidth, client device memory, and visual fidelity, ensuring a smooth, responsive experience on everything from workstations to laptops to mobile devices. Using our proprietary AI system together with NVIDIA software and hardware, such as fVDB and NVIDIA GPUs hosted by CoreWeave, we deliver the best possible view without overloading the user’s device, trading off interactivity and responsiveness against visual fidelity and available compute. For our tests, we used high-end RTX 4090 laptop and RTX 5090 desktop GPUs, but we also tested on older (and cheaper) GPUs such as the RTX 3070m. Below, we walk through our results.
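Miris performs this balancing with a proprietary AI system, so the following is only a minimal sketch of the underlying trade-off under assumed numbers. It picks the highest of several hypothetical fidelity tiers whose resident GPU memory fits the device’s budget and whose initial download arrives within a target startup time at the measured bandwidth. The `FidelityTier` type, the tier sizes, and the `choose_tier` helper are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FidelityTier:
    """A hypothetical quality level for a streamed asset (illustrative numbers only)."""
    name: str
    resident_mb: float  # GPU memory the tier occupies once loaded (assumed)
    initial_mb: float   # data needed before the first useful view appears (assumed)


# Hypothetical tiers, ordered from lowest to highest fidelity.
TIERS = [
    FidelityTier("low", resident_mb=1_500, initial_mb=20),
    FidelityTier("medium", resident_mb=4_000, initial_mb=60),
    FidelityTier("high", resident_mb=10_000, initial_mb=150),
]


def choose_tier(memory_budget_mb: float, bandwidth_mbps: float,
                max_startup_s: float, tiers: list[FidelityTier] = TIERS) -> FidelityTier:
    """Return the highest-fidelity tier that fits the memory budget and starts in time.

    `tiers` must be ordered from lowest to highest fidelity. If nothing fits,
    fall back to the lowest tier so the viewer always sees something.
    """
    best = tiers[0]
    for tier in tiers:
        startup_s = tier.initial_mb * 8 / bandwidth_mbps  # megabytes -> megabits
        if tier.resident_mb <= memory_budget_mb and startup_s <= max_startup_s:
            best = tier
    return best


if __name__ == "__main__":
    # ~6 GB of free GPU memory on a 100 Mbit/s link
    print(choose_tier(6_000, 100, 5).name)    # medium
    # ~24 GB of free GPU memory on a 1 Gbit/s link
    print(choose_tier(24_000, 1_000, 5).name)  # high
```

A production system would weigh many more signals, such as viewer position, scene content, and measured frame times. This sketch only shows the shape of the memory and bandwidth constraints.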

Although we did notice a reduction in throughput when switching from the RTX 4090 to the RTX 3070m, our system was still able to stream acceptable levels of

Unlike other spatial streaming technologies, such as pixel streaming or command streaming, Miris’ data streams resolve into 3D assets on the client. Because each new view is rendered locally, camera motion does not have to wait for a network round trip, which allows customers to stream over very high-latency connections (spanning up to 10,000 miles, with latencies of several hundred milliseconds). It also means that Miris data can be intermixed with local 3D data, such as dynamically generated objects for IoT, robotics simulation, and augmented training data, all with six degrees of freedom (6DoF). Having the true 3D data available locally lets users retain full interactivity and depth perception, and mix streamed assets with local ones. This is especially powerful for robotics and simulation workloads, where streamed data must integrate with dynamic, real-time inputs like sensor feeds or synthetic training data.
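The latency advantage comes down to where the next frame is rendered. The calculation below is a back-of-the-envelope sketch, not a measurement. It assumes light travels through optical fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum), ignores routing, queuing, and video encode/decode, and assumes a 60 Hz display. With pixel streaming, every camera move pays a network round trip before the updated image appears. When the stream resolves to 3D data on the client, the next view costs roughly one local frame regardless of distance.

```python
MILES_TO_KM = 1.609344
FIBER_KM_PER_S = 200_000  # approximate speed of light in optical fiber (assumption)


def fiber_rtt_ms(distance_miles: float) -> float:
    """Lower-bound round-trip time over fiber, ignoring routing and queuing."""
    one_way_s = distance_miles * MILES_TO_KM / FIBER_KM_PER_S
    return 2 * one_way_s * 1000


def motion_to_photon_ms(distance_miles: float, frame_ms: float = 1000 / 60,
                        rendered_locally: bool = True) -> float:
    """Rough delay between a camera move and the updated image on screen.

    Pixel streaming pays a network round trip per camera move (encode/decode
    is ignored here); locally rendered 3D data only pays for one local frame.
    """
    if rendered_locally:
        return frame_ms
    return fiber_rtt_ms(distance_miles) + frame_ms


if __name__ == "__main__":
    print(f"fiber RTT over 10,000 miles: {fiber_rtt_ms(10_000):.1f} ms")  # 160.9 ms
    print(f"pixel streaming: {motion_to_photon_ms(10_000, rendered_locally=False):.1f} ms")  # 177.6 ms
    print(f"local 3D render: {motion_to_photon_ms(10_000):.1f} ms")  # 16.7 ms
```

Real network paths add routing and queuing delay on top of this physical lower bound, so the gap between the two approaches is usually larger in practice.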

Our OpenUSD integration takes advantage of OpenUSD’s flexible Hydra rendering framework. Within an OpenUSD scene, we create a MirisAsset primitive that contains information about the specific asset being streamed. As with any other OpenUSD prim, Hydra determines how best to visualize the Miris Asset, and this is where our Miris session connection is initiated and the first bytes of spatial data are streamed. The whole process often takes less than a few hundred milliseconds, far less time than loading a typical OpenUSD scene often takes.

The sample Python code below shows how simple it is to add a Miris Asset to an OpenUSD stage.

```python
from pxr import Usd
from miris import UsdMiris

# Make a stage
stage = Usd.Stage.CreateInMemory()

# Create Miris Asset primitive with a UUID attribute
miris_asset = UsdMiris.Asset.Define(stage, "/World/Warehouse")
miris_asset.CreateUuidAttr().Set("fec3528d-c456-47be-83ab-ab6d9927b9c7")

# Save the stage
stage.Export("scene.usda")
```
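To make the loading side concrete, here is a small, self-contained mock of what happens when a stage like this is opened: a delegate walks the prims, opens one streaming session per MirisAsset prim, and leaves every other prim to the normal render path. This is not Hydra’s API or the Miris SDK. The `Prim`, `Session`, and `MockMirisDelegate` types, the `open_session` method, and the `"uuid"` attribute key are hypothetical stand-ins, and a "session" here is just a record rather than a network connection.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Prim:
    """Minimal stand-in for a scene prim: a path, a type name, and attributes."""
    path: str
    type_name: str
    attributes: dict[str, str] = field(default_factory=dict)


@dataclass
class Session:
    """Placeholder for a streaming session (a real one would own a network connection)."""
    asset_uuid: str
    prim_path: str


class MockMirisDelegate:
    """Hypothetical delegate: one session per MirisAsset prim, everything else passed through."""

    def __init__(self) -> None:
        self.sessions: dict[str, Session] = {}
        self.other_prims: set[str] = set()

    def open_session(self, prim: Prim) -> Session:
        # Hypothetical: a real client would contact the streaming service here.
        return Session(asset_uuid=prim.attributes["uuid"], prim_path=prim.path)

    def sync(self, prims: list[Prim]) -> None:
        """Called whenever the scene changes; safe to call repeatedly."""
        for prim in prims:
            if prim.type_name == "MirisAsset":
                if prim.path not in self.sessions:  # reuse existing sessions
                    self.sessions[prim.path] = self.open_session(prim)
            else:
                self.other_prims.add(prim.path)  # left to the normal render path


if __name__ == "__main__":
    scene = [
        Prim("/World", "Xform"),
        Prim("/World/Warehouse", "MirisAsset",
             {"uuid": "fec3528d-c456-47be-83ab-ab6d9927b9c7"}),
        Prim("/World/Robot", "Mesh"),
    ]
    delegate = MockMirisDelegate()
    delegate.sync(scene)
    delegate.sync(scene)  # a second sync does not open a second session
    print(len(delegate.sessions), sorted(delegate.other_prims))
    # 1 ['/World', '/World/Robot']
```

Keying sessions by prim path means repeated scene syncs stay cheap: only newly added Miris prims trigger a new connection.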

When an OpenUSD scene containing Miris primitives is loaded, our Hydra delegate recognizes these primitives and initiates a connection to the Miris cloud service. As the viewer moves around and interacts with the scene, our runtime calculates the ideal amount of data needed for high-fidelity imagery from the viewer’s perspective, issuing only the requests it needs, just in time. Our real-time volumetric renderer then draws the view and presents it back to the Hydra-enabled application. The first bytes arrive within milliseconds, giving users instant context while additional data streams in to progressively improve fidelity as needed. Client applications can therefore stay lightweight, loading a virtually unlimited number of assets on demand.
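Miris’ runtime decides what to fetch with its own proprietary logic. As a rough illustration of the general idea of view-dependent, just-in-time streaming, the sketch below uses a common level-of-detail technique rather than Miris’ method. Each hypothetical chunk records how much world-space error it removes once loaded. That error is projected to the screen by dividing by the distance to the camera. Each frame, only chunks whose projected improvement exceeds a pixel threshold are requested, largest first, within a per-frame byte budget, and a finer chunk waits until the coarser chunk it refines is loaded. Coarse chunks remove the most error, so they arrive first and finer ones progressively refine the view. `Chunk`, `plan_requests`, and all numbers are assumptions.

```python
from __future__ import annotations

import math
from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class Chunk:
    """A hypothetical streamable piece of an asset at one refinement level."""
    chunk_id: str
    center: Vec3
    error_removed: float            # world-space error this chunk eliminates once loaded
    size_bytes: int
    requires: Optional[str] = None  # coarser chunk that must be loaded first


def projected_error(chunk: Chunk, camera: Vec3, pixels_per_unit: float = 1000.0) -> float:
    """Approximate on-screen error: world-space error shrinks with distance to the camera."""
    distance = max(math.dist(chunk.center, camera), 1e-6)  # avoid division by zero
    return chunk.error_removed * pixels_per_unit / distance


def plan_requests(candidates: list[Chunk], resident: set[str], camera: Vec3,
                  threshold_px: float, frame_budget_bytes: int) -> list[str]:
    """Choose which chunks to request this frame, most visible improvement first."""
    needed = [c for c in candidates
              if c.chunk_id not in resident and projected_error(c, camera) > threshold_px]
    needed.sort(key=lambda c: projected_error(c, camera), reverse=True)

    plan: list[str] = []
    spent = 0
    for chunk in needed:
        parent_ready = (chunk.requires is None or chunk.requires in resident
                        or chunk.requires in plan)
        if not parent_ready or spent + chunk.size_bytes > frame_budget_bytes:
            continue  # revisit next frame; a smaller chunk may still fit now
        plan.append(chunk.chunk_id)
        spent += chunk.size_bytes
    return plan


if __name__ == "__main__":
    camera = (0.0, 0.0, 0.0)
    chunks = [
        Chunk("near-L0", (0.0, 0.0, 10.0), 1.0, 200_000),
        Chunk("near-L1", (0.0, 0.0, 10.0), 0.1, 800_000, requires="near-L0"),
        Chunk("far-L0", (0.0, 0.0, 1000.0), 1.0, 200_000),
    ]
    print(plan_requests(chunks, set(), camera, threshold_px=2.0,
                        frame_budget_bytes=1_000_000))
    # ['near-L0', 'near-L1']  (far-L0 projects to ~1 px, below the threshold)
```

Re-running the planner every frame with the current camera position is what makes the requests "just in time": when the viewer moves toward the far region, its chunks become the highest-priority requests.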

Miris’ cloud service accepts customer uploads, including 2D media such as images and video as well as OpenUSD/OpenPBR-compliant assets, and transforms them into streamable 3D assets that are accessible globally. The streams can be consumed with the Miris SDK through integrations with common engines and applications such as Unity, NVIDIA Omniverse, and popular 3D web engines such as three.js. As with our other integrations, we used our proprietary rendering components within OpenUSD’s Hydra to optimally render streamed Miris data in the OpenUSD viewing context. Wherever Miris primitives are defined in an OpenUSD scene for a particular Miris streamable asset, we resolve that stream at runtime, respecting the transforms authored on the Miris primitive just like any other object. This enables multi-asset composition within the OpenUSD scene.
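Because each Miris primitive is positioned by the transforms authored on it and on its ancestors, the same streamed asset can appear several times in one scene. The sketch below shows only the transform arithmetic, under simplifying assumptions: each transform is a uniform scale followed by a translation, applied from the prim up through its ancestors. The `Placement` type and `world_point` helper are hypothetical. Real OpenUSD transforms also include rotation, non-uniform scale, and arbitrary op orders; in OpenUSD code, `UsdGeom.Imageable.ComputeLocalToWorldTransform` (or a `UsdGeom.XformCache`) computes the full 4x4 world matrix.

```python
from __future__ import annotations

from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class Placement:
    """Hypothetical simplified transform: uniform scale, then translation."""
    scale: float = 1.0
    translate: Vec3 = (0.0, 0.0, 0.0)

    def apply(self, p: Vec3) -> Vec3:
        x, y, z = (self.scale * c + t for c, t in zip(p, self.translate))
        return (x, y, z)


def world_point(local_point: Vec3, chain: list[Placement]) -> Vec3:
    """Map a point from a streamed asset's local space into world space.

    `chain` runs from the Miris prim itself up to the root, so the prim's own
    placement is applied first and each ancestor's afterwards.
    """
    p = local_point
    for placement in chain:
        p = placement.apply(p)
    return p


if __name__ == "__main__":
    # One streamed point, seen through two hypothetical prims that share an asset.
    corner = (10.0, 0.0, 5.0)
    site = Placement(translate=(0.0, 0.0, -50.0))  # shared parent Xform
    warehouse_a = [Placement(), site]
    warehouse_b = [Placement(scale=0.5, translate=(200.0, 0.0, 0.0)), site]
    print(world_point(corner, warehouse_a))  # (10.0, 0.0, -45.0)
    print(world_point(corner, warehouse_b))  # (205.0, 0.0, -47.5)
```

The streamed data stays in the asset’s local space; only the per-prim transform chain differs, which is what lets one stream be composed into a scene multiple times.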

OpenUSD is becoming the standard across industries for describing, composing, and exchanging 3D assets, in line with its overarching goal of making 3D data interoperable, portable, and open. At its core, OpenUSD enables every creative, simulation, and AI pipeline to speak the same geometric and semantic dialect, regardless of industry.

OpenUSD complements Miris’ mission by acting as the connective tissue between the upstream 3D asset creation process and our ability to deliver those 3D assets at scale. By embracing OpenUSD as a native input and interchange format, Miris can:

Together, OpenUSD and Miris’ spatial streaming provide the missing link between 3D asset creation and delivering those assets to millions of users. This enables a true “create once, stream anywhere” future for 3D assets.

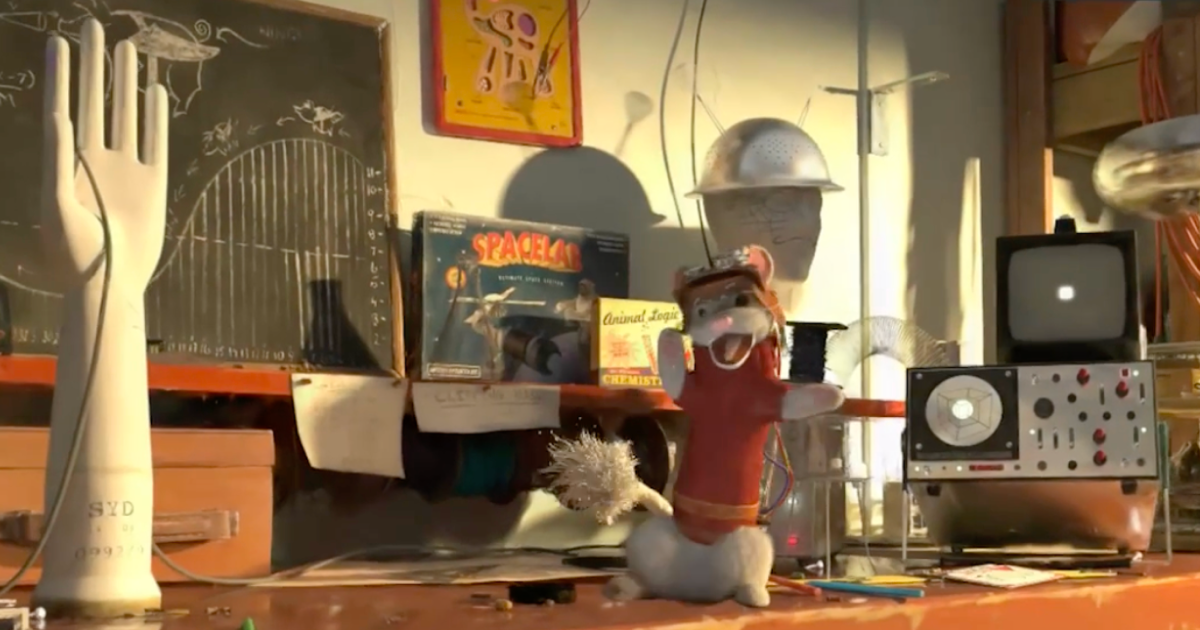

At Miris, we’re always pushing the boundaries of 3D streaming, AI, and related technologies. Many use cases call for streaming not just static assets but animated ones as well. Look for future developments from us around these use cases; in the meantime, we hope you enjoy the sneak peek below as much as we enjoyed making it. Although we don’t anticipate much demand for dancing stoats (a stoat is apparently a type of weasel) in IsaacSim, we couldn’t resist.

We’re continuing to collaborate with partners across industries to scale 3D streaming for simulation, design, and visualization. If you’re building with OpenUSD, Omniverse, or IsaacSim and want to explore how adaptive streaming can change your workflows — get in touch!

Want to help build the future of streamed 3D content? We’re hiring!

#GTC25 #NVIDIAOmniverse #IsaacSim #OpenUSD #Robotics #ROS2 #DigitalTwin #CloudXR #SpatialComputing